In this blossoming era of AI, efficient computational approaches to processing and storing large amounts of data are required. However, current computer designs have inherent performance limitations.

In recent years, research has been focused on the development of alternative computing architectures that mimic the brain. These devices, called neuromorphic computers, circumvent many of the issues associated with the traditional von Neumann architecture, which has been around since 1945 and is composed of processing and memory units.

These units are physically separated, so data must travel between them through a set of wires or conductors called the “memory bus”. This slows down the whole computing system, consumes a large amount of power, and is a major barrier to efficient performance.

The field of neuromorphic computing has exploded in the last decade. It addresses these issues with an integrated unit that combines memory storage and computation, hence the name “in-memory computing”. With memory cells and processing units that are analogous to the biological synapse and neuron, this new architecture avoids the long distances that data must travel in traditional computer architectures.

However, most in-memory computing relies on a concept called resistor-based memory, in which data is stored and processed using controlled electrical resistance. While this enables brain-like memory processing, these devices still suffer from a number of drawbacks, including high energy requirements and a complicated system setup.

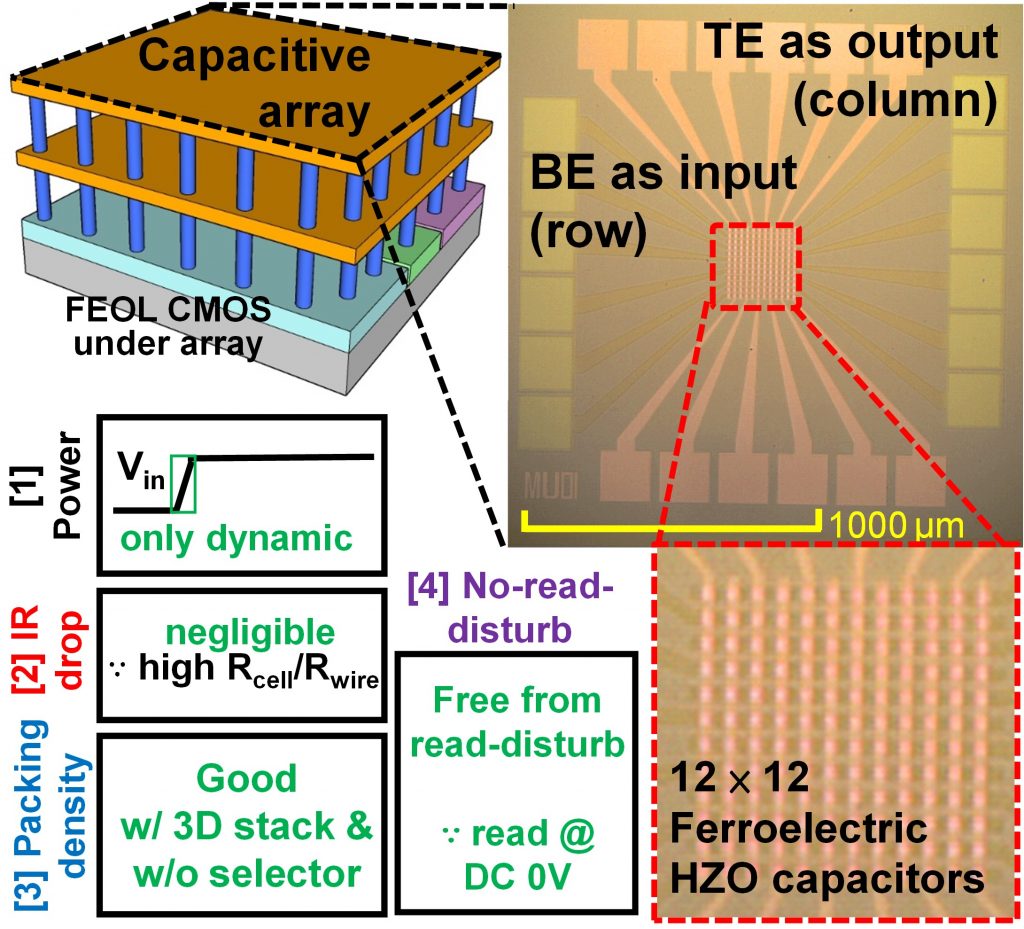

Researchers led by Shimeng Yu from the Georgia Institute of Technology sought to get around these issues by developing a novel type of electrical artificial synapse that runs on capacitor-based memory.

Capacitors record and store data as an electrical charge. In addition to requiring less power to operate, they also have the added advantage of being non-conductive, meaning the electrical charges cannot easily penetrate the capacitive synapse. This avoids what researchers call a sneaking leakage current, which has been a chronic problem in artificial synaptic systems for years.

Without a sneaking leakage current, there is no need for an additional circuit component called a “selector”, whose job is to minimize leakage. Because of their fabrication requirements, selectors can only be incorporated into the bottom layer of a computer chip, which makes vertically stacking artificial synapses extremely difficult. Removing the selector opens the door to vertically stacked designs, which yield higher storage density and better performance. The challenge has been finding the right material to do this.

Using hafnium oxide, a material long used in the semiconductor industry, the team was able to create the capacitive artificial synapse. The material showed different capacitance values depending on the electrical charges stored in it, and because it is already widely used, commercial translation of this technology could be relatively straightforward.

The capabilities of the new hafnium-based capacitive synapses were demonstrated in a system-level performance test at the array level, indicating their potential for real-world applications.
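To give a feel for how an array of capacitive synapses might compute in memory, here is a minimal sketch of an idealized crossbar. It assumes each cell stores one of two capacitance states and that the charge collected on a column is the sum of capacitance times applied voltage over its rows. The capacitance values, array size, and function names are hypothetical, chosen for illustration, and are not taken from the study.

```python
# Hypothetical two-level capacitance states in femtofarads (illustrative only,
# not measurements from the study).
C_LOW_FF = 1.0
C_HIGH_FF = 2.0


def program_array(weights):
    """Map a binary weight matrix onto capacitance states (fF)."""
    return [[C_HIGH_FF if w else C_LOW_FF for w in row] for row in weights]


def column_charges(capacitances, voltages):
    """Idealized readout: Q_j = sum_i C_ij * V_i (fC when C is in fF, V in volts)."""
    n_cols = len(capacitances[0])
    return [
        sum(capacitances[i][j] * voltages[i] for i in range(len(voltages)))
        for j in range(n_cols)
    ]


# A 3x2 array: three input rows, two output columns.
weights = [[1, 0], [0, 1], [1, 1]]
caps = program_array(weights)

# Input voltages applied to the rows (volts).
voltages = [1.0, 0.0, 1.0]

charges = column_charges(caps, voltages)
print(charges)
```

In this idealized picture the stored capacitances act as the weights and the applied voltages as the inputs, so the multiply-and-accumulate step happens where the data is stored rather than after a trip across a memory bus.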

Although the new synapse was successful at the system level, there is still room for improvement, the team said. For example, it still needs to be scaled down to tens to hundreds of nanometers, which is within the range of current fabrication guidelines. That is roughly 1,000 to 10,000 times thinner than a human hair. Additionally, further structural modification or device geometry engineering of the capacitors could yield a more robust synapse with reliable data states.
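As a quick sanity check on that comparison, assume a human hair is about 100 micrometers across (a commonly quoted ballpark; real hair varies considerably):

$$
\frac{100\ \mu\text{m}}{100\ \text{nm}} = 10^{3}, \qquad \frac{100\ \mu\text{m}}{10\ \text{nm}} = 10^{4}
$$

So a device measuring 10 to 100 nanometers would be on the order of a thousand to ten thousand times thinner than a hair, under that assumption.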

This novel, though still immature, technology could achieve performance comparable to or even better than that of more mature synaptic array technologies. It will be exciting to investigate and further optimize capacitive synapse device structures and circuitry to keep improving the performance of in-memory computing.

Written by: Jae Hur

Reference: Jae Hur, et al., Non-volatile Capacitive Crossbar Array for In-memory Computing, Advanced Intelligent Systems (2022). DOI: 10.1002/aisy.202100258

Disclaimer: The author of this article was involved in the study