How do we take the next step in computing? When can we stop teaching our computers using software and programming? Can we build a network of parts that make a greater whole? A whole in which new and unexpected patterns of learning and prediction emerge, like the spontaneous movements of flocking birds.

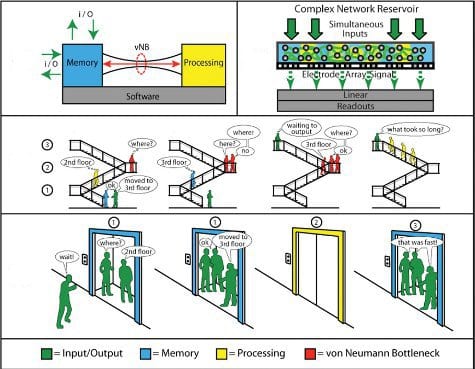

Conventional computing is slowed down by log-jams at the “von Neumann bottleneck”, as instructions and data are shuttled back and forth between memory and processor cores. In a recent paper, Adam Z. Stieg and co-workers explain how a promising new type of computing apes biological systems and avoids information bottlenecks. Reservoir computing is an extension of research into artificial neural networks, in which software running on conventional computer systems mimics information processing in natural systems. In reservoir computing, simultaneous input signals interact with each other and with the network (the reservoir); the output is a change in the state of the system. The reservoir acts as a “black box”. It is continuously modified by the inputs and retains temporal information, a fading memory of previous signals. The reservoir is poised between simply periodic and wildly unpredictable oscillations and, as the authors write, operates at the edge of chaos. Only such a dynamic, evolving system can generate emergent phenomena such as intelligence and learning.
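To make the idea of a reservoir with fading memory more concrete, here is a minimal software sketch in Python. It follows the textbook echo state network recipe, not the authors' nanoscale hardware: a fixed random recurrent network is driven by an input signal, and its state is updated through a `tanh` non-linearity. The function names (`make_reservoir`, `step`, `run`), the network size and the `scale` parameter are all assumptions chosen for illustration. Keeping `scale` below 1 guarantees that the influence of the starting state fades; pushing it towards 1 makes the memory last longer, which is loosely the regime the article calls the edge of chaos.

```python
import math
import random


def make_reservoir(n, scale=0.9, seed=0):
    """Build fixed random weights for a hypothetical n-node reservoir.

    Each row of the recurrent matrix is rescaled so its absolute values
    sum to `scale`. With scale < 1 and a tanh non-linearity, the update
    is a contraction, so old information fades (an assumption of this
    sketch, not a property claimed for the hardware in the paper).
    """
    rng = random.Random(seed)
    w = []
    for _ in range(n):
        row = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm = sum(abs(x) for x in row)
        w.append([scale * x / norm for x in row])
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return w, w_in


def step(state, u, w, w_in):
    """Advance the reservoir one time step for scalar input u."""
    return [
        math.tanh(sum(w_ij * s_j for w_ij, s_j in zip(row, state)) + w_in_i * u)
        for row, w_in_i in zip(w, w_in)
    ]


def run(inputs, w, w_in, state=None):
    """Drive the reservoir with a sequence of inputs; return the final state."""
    if state is None:
        state = [0.0] * len(w)
    for u in inputs:
        state = step(state, u, w, w_in)
    return state


# Two copies of the same reservoir start in opposite states but receive
# the same input signal. Fading memory means the difference shrinks.
w, w_in = make_reservoir(20)
signal = [math.sin(0.3 * t) for t in range(200)]
final_a = run(signal, w, w_in, [1.0] * 20)
final_b = run(signal, w, w_in, [-1.0] * 20)
gap = max(abs(a - b) for a, b in zip(final_a, final_b))
print("largest remaining difference between the two runs:", gap)
```

In a complete echo state network, only a simple readout layer on top of these states would be trained; the reservoir weights themselves stay fixed, which is what makes a physical, self-assembled network a plausible substitute for the simulated one.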

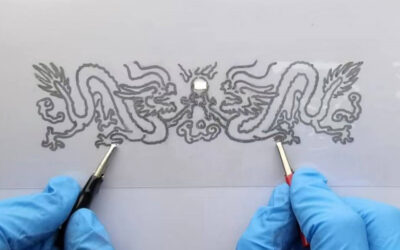

Reservoir computing has generally focused on simulation and modeling using traditional computational architectures. However, in the long term, a new type of computer hardware must be developed: a complex wiring architecture whose non-linear properties emulate a neural network. The authors propose that the combination of directed assembly and self-assembly of nanoscale building blocks can add the right degree of randomness to the network without compromising on function. By combining lithography techniques with solution-phase electrochemistry, the authors generated a complex network of randomly distributed, highly interconnected inorganic synapses. The electrical activity of this network exhibited emergent behavior.

Research into neural networks shows that there are spatiotemporal relationships between subunits: each part of the system is in communication with every other part, at every point in its history. The influence of past events decays slowly. These interconnections are the basis of learning and memory.

Such are the dynamics that could see new behavior emerging from synthetic computing networks. A next generation of computing may be imminent.