It’s the golden age of deep learning, as evidenced by breakthroughs in many fields of artificial intelligence (AI), such as facial identification, autonomous cars, smart cities, and smart healthcare. In the coming decade, research is expected to equip existing AI techniques with the ability to think and learn as humans do, a skill that will be crucial for computational systems and AI to interact with the real world and process continuous streams of information.

While this leap is appealing, existing AI models suffer from performance degradation when trained sequentially on new concepts, because what they learned earlier gets overwritten. This phenomenon, known as “catastrophic forgetting”, is rooted in the stability-plasticity dilemma: the AI model must update its memory to keep adapting to new information while keeping its existing knowledge stable. This dilemma prevents existing AI from learning continuously from real-world information.
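To make the overwriting concrete, here is a toy illustration of our own (not taken from the study): a single two-weight linear model is trained with plain stochastic gradient descent on task A and then on task B. The two tasks share the same inputs but are solved by different weights, so learning B necessarily erases A. The tasks, learning rate, and helper names (`predict`, `train`, `mse`) are hypothetical choices made for the sketch.

```python
# Toy demonstration of catastrophic forgetting (hypothetical tasks, not from the study).

def predict(w, x):
    """Linear model: y_hat = w . x"""
    return sum(wi * xi for wi, xi in zip(w, x))

def train(w, data, lr=0.1, epochs=200):
    """Plain SGD on squared error; the model's only 'memory' is the weight vector w."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

def mse(w, data):
    """Mean squared error of the model on one task."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
task_a = [(x, 2.0 * x[0] + 1.0 * x[1]) for x in inputs]   # task A is solved by w = (2, 1)
task_b = [(x, -1.0 * x[0] + 3.0 * x[1]) for x in inputs]  # task B is solved by w = (-1, 3)

w = train([0.0, 0.0], task_a)
err_a_before = mse(w, task_a)   # error on A right after learning A
w = train(w, task_b)            # sequential training on B reuses (overwrites) the same weights
err_a_after = mse(w, task_a)    # error on A after learning B

print(f"task A error after learning A: {err_a_before:.2e}")
print(f"task A error after learning B: {err_a_after:.2f}")
```

With these settings the error on task A is essentially zero after the first phase and jumps to about 4.67 once the model has learned task B, even though nothing about task A itself changed.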

Several continual learning models have been proposed to solve this problem, but they usually require high computing power and large memory capacity, which makes running them efficiently on resource-limited edge computing systems a challenge. Edge computing moves computation from clouds and data centers as close as possible to where the data originate, such as devices linked to the Internet of Things.

Meanwhile, conventional computing systems are based on the von Neumann architecture, in which memory and the central processing unit (CPU) are physically separate, so massive amounts of data must be shuttled between them at considerable cost in energy and time.

A continual learning model

Dashan Shang, a professor at the Institute of Microelectronics, Chinese Academy of Sciences, together with group members Yi Li and Woyu Zhang and with Zhongrui Wang of the University of Hong Kong, has developed a prototype that realizes an energy-efficient continual learning system. Their findings were recently published in the journal Advanced Intelligent Systems.

Taking their cue from the human brain, which itself practices lifelong continual learning, the researchers proposed a metaplasticity-based model they called the mixed-precision continual learning model (MPCL). Metaplasticity refers to activity-dependent changes in neural function that modulate synaptic plasticity, that is, how difficult it is to change the strength of the connections between neurons. Since previously learned knowledge is stored through synaptic plasticity, metaplasticity has been viewed as an essential ingredient in balancing forgetting and remembering.

“To mimic synapses’ metaplasticity, our MPCL model adopts a mixed-precision approach to regulate the degree of forgetting in order to update only the memory that is tightly correlated to the new task,” Shang said. “Thus, we effectively balance forgetfulness and memory, avoiding massive amounts of memory being overwritten during continual learning, while maintaining the learning potential of new knowledge.”
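The article does not spell out the MPCL update rule, so the sketch below is only a generic illustration of the two ideas in Shang's description, built on our own assumptions. It uses a metaplasticity rule of the kind proposed for binarized neural networks: a step that would shrink a weight's magnitude is damped by a factor 1 - tanh²(m·h), so strongly consolidated weights resist being overwritten. Each full-precision "hidden" weight also has a low-precision quantized copy, standing in for what a memory device would store, and that copy is rewritten only when its level changes. The names `quantize` and `meta_update` and the constants `levels`, `w_max`, `lr`, and `m` are hypothetical.

```python
import math

# Hypothetical sketch of metaplasticity-gated, mixed-precision updates.
# NOT the MPCL algorithm from the study; names and constants are assumptions.

def quantize(w, levels=8, w_max=1.0):
    """Snap a full-precision weight to one of `levels` evenly spaced values in [-w_max, w_max]."""
    step = 2 * w_max / (levels - 1)
    w = max(-w_max, min(w_max, w))  # clip to the representable range
    return round((w + w_max) / step) * step - w_max

def meta_update(hidden, grads, lr=0.1, m=2.0):
    """Gradient step on full-precision 'hidden' weights with metaplastic damping.

    If a step would move a weight toward zero (toward erasing what it stores),
    it is scaled by 1 - tanh(m * h)**2: close to 0 for large |h| (consolidated
    weights) and close to 1 for small |h| (weights still free to learn)."""
    updated = []
    for h, g in zip(hidden, grads):
        delta = -lr * g
        if h * delta < 0:  # this step would shrink |h|
            delta *= 1.0 - math.tanh(m * h) ** 2
        updated.append(h + delta)
    return updated

hidden = [0.9, 0.05]  # one consolidated weight, one weakly used weight
grads = [1.0, 1.0]    # a new task pushes both weights downward
new_hidden = meta_update(hidden, grads)

# Only weights whose low-precision level changes would need a (costly) device write.
writes = [i for i, (old, new) in enumerate(zip(hidden, new_hidden))
          if quantize(old) != quantize(new)]
print([round(h, 3) for h in new_hidden], writes)
```

Here the consolidated weight barely moves (from 0.9 to about 0.89) and keeps its quantized level, while the weakly used weight crosses zero and is the only one flagged for a write. Gating writes on quantized-level changes is one plausible way mixed precision could limit how much stored memory gets overwritten; whether MPCL does exactly this is not stated in the article.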

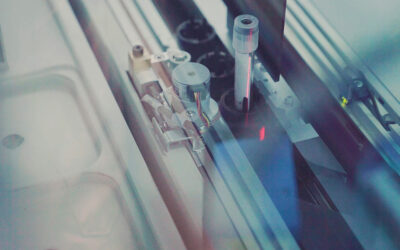

In-memory computing is a promising approach for future computing in which calculations are performed inside memory components, avoiding data transfer between separate memory and CPU units. Following this approach, the researchers deployed their MPCL model on an in-memory computing hardware system based on resistance random-access memory (RRAM). The system uses an RRAM crossbar-array chip consisting of millions of resistance-tunable devices, which can simultaneously store and process data by modifying their resistance, minimizing the energy and time needed to move data between processor and memory. In addition, the RRAM crossbar reduces computational complexity by exploiting physical laws: Ohm’s law performs multiplication and Kirchhoff’s current law performs addition.
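The multiply-and-add trick can be written out in a few lines. In an idealized crossbar (ignoring wire resistance, device noise, and the extra circuitry real chips need for signed weights and analog-to-digital conversion), each row i is driven by a voltage V_i and the device at row i, column j has conductance G_ij. By Ohm's law that device passes a current G_ij·V_i, and by Kirchhoff's current law the currents on column j add up to I_j = Σ_i G_ij·V_i, which is one entry of a matrix-vector product. The function name `crossbar_mvm` and the numbers below are illustrative assumptions.

```python
def crossbar_mvm(conductances, voltages):
    """Ideal crossbar read-out.

    conductances[i][j]: conductance G_ij of the device at row i, column j
    voltages[i]: voltage V_i applied to row i
    Returns the column currents I_j = sum_i G_ij * V_i."""
    currents = [0.0] * len(conductances[0])
    for v_i, row in zip(voltages, conductances):
        for j, g_ij in enumerate(row):
            # Ohm's law gives each device's current; summing along the column is Kirchhoff's current law.
            currents[j] += g_ij * v_i
    return currents

# Conductances in microsiemens and voltages in volts, so currents come out in microamps.
G = [[1.0, 2.0],
     [3.0, 4.0]]
V = [0.5, 0.25]
print(crossbar_mvm(G, V))  # [1.25, 2.0]
```

The loop only simulates the arithmetic. In hardware every column settles at the same time, so the whole product is obtained in a single read step, and the weights (the conductances) never leave the array.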

RRAM-based in-memory computing (IMC) therefore offers a promising way for edge computing systems to implement AI models. “By combining metaplasticity with the RRAM-based IMC architecture, we developed a robust, high-speed, low-power continual learning prototype hardware system,” said Li, the study’s first author. “Through well-designed in-situ optimizations, we demonstrated both high energy efficiency and high classification accuracy on five continual learning tasks.”

Next, the team plans to further expand the adaptive capability of the model so that it can autonomously process on-the-fly information in the real world. “This research is still in its infancy, involving small-scale demonstrations,” added Shang. “In the future, we can expect that adoption of this technique will allow edge AI systems to evolve autonomously without human supervision.”

Reference: Yi Li, et al., Mixed-precision continual learning based on computational resistance random access memory, Advanced Intelligent Systems (2022). DOI: 10.1002/aisy.202200026

Feature image credit: david latorre romero on Unsplash