It is probably safe to say that the research enterprise, especially in academia, will see profound changes in the next decade. As outlined in the Nature commentary by Whitesides and Deutsch,1 part of the reason will be changing accountability towards taxpayers. As life becomes tougher for the average citizen of the western world, the research enterprise represents a uniquely vulnerable target (in certain countries more than others) for the increased thirst for accountability. Different people have different angles on this, but the trend appears robust, and it is enabled by how few complications accompany an attack on the ivory tower: the repercussions of cutting science budgets are less politically complex to manage than those of cutting other types of inefficiencies that have, in many cases, “political immune systems”. Our community of scientists is (or acts as if it were) oblivious to these dynamics and is usually content to claim that we ought to be supported because of the very simple and self-evident (to us scientists at least…) fact that research and development, in one form or another, are the reason why we are not living in caves anymore. This unwillingness to engage with all the complexities of science’s place in society makes most of us blind to the long-term repercussions of our modus operandi. Ironically, the class of people that most prides itself on having a long-term vision is, in my view, falling victim to a shortsightedness that, as I will argue here, will wreak havoc on our most valuable asset: credibility.

A trend that, in my view, hasn’t been discussed sufficiently is our society’s shift towards transparency. For better or for worse, because of technological developments, what we do and what we say are becoming increasingly easy to monitor and observe, and frighteningly easy to broadcast for the world to see. This has, of course, led to an increasing volume of information to which our society has become addicted. As any addict could testify, what gave you a high a year ago is not sufficient anymore. In the same manner, our society has become more and more receptive to scandals, negativity and buzz. The increasingly excitable nature of society is driving viral patterns, and the way in which science is covered in the news is an example of this. The ease with which information is broadcast makes “news” extremely easy to produce for a voracious public.

How does this connect to science and to what we do?

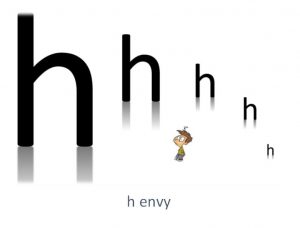

We are exposing science by de facto embracing a model of accountability that should concern us (at least from a historical and philosophical perspective): the model of “publish or perish”. This has since been replaced by an even less sophisticated incarnation, the h-index, which is defined in the following way: “an author-level metric that attempts to measure both the productivity and citation impact of the publications of a scientist or scholar. The index is based on the set of the scientist’s most cited papers and the number of citations that they have received in other publications”.2 Some, however, believe that publication numbers are just as important as h. Proponents would say that the h-index is the antidote to our obsession with publication numbers, without realizing that it has not worked out that way. While “publish or perish” could have been interpreted constructively (as Faraday did) to mean that finished work must find its way to the public or it might as well not exist, the current model leaves no room for interpretation: here is the handle and here is how to crank it. Now crank it!
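For readers who have not seen how the number is actually obtained, here is a minimal sketch. It assumes the usual working definition, which the quotation above does not spell out: h is the largest number such that h of an author’s papers have at least h citations each. The function name and the citation counts are hypothetical and serve only as illustration.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each.

    Assumes the usual working definition of the h-index; `citations` is a
    list of per-paper citation counts (hypothetical data in this sketch).
    """
    ranked = sorted(citations, reverse=True)  # most cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Two hypothetical publication records with very different citation profiles
steady_record = [10, 8, 5, 4, 3]
landmark_record = [250, 90, 40, 4, 0]

print(h_index(steady_record))
print(h_index(landmark_record))
```

Both hypothetical records yield the same value, which hints at how much information a single number of this kind discards.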

Unfortunately, we are showing poor vision by not realizing that these numbers, once they are held to have any value, become metrics that policy-makers use extensively to calculate return on investment in research, quantify opportunity costs and apply economics to science in an effort to improve efficiency (however that is calculated) through the principles of capitalism. How does one assess return on investment on time scales over which the research outcomes are still being digested, appreciated and understood by the community? A politician’s term in office lasts 3-7 years, whereas a scientific topic takes 1-1.5 decades to mature. While I am not an economist, I am concerned that the principles of capitalism might not hold water when value cannot be quantified precisely (as is the case for knowledge creation as well as education). Even if we decide to embrace a business approach to scientific research, we should probably remember Goodhart’s law in economics3 that “when a measure becomes a target, it ceases to be a good measure” or Campbell’s law in sociology4 that “the more any quantitative social indicator (or even some qualitative indicator) is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

Yet this is not what I am most concerned about. The backbone of science is trust. There is nothing else, really. The entire system is based on it, starting from the scientific method and the concept of reproducibility. Reproducibility, introduced as a concept by Robert Boyle in the 1660s, set a gold standard for scientific experimentation: all empirical findings, arising from observing nature and drawing conclusions, must be verified by independent replication. In this sense, researchers can trust scientific publications that provide sufficient detail regarding protocols, procedures, equipment and observations. This is trust in the narrow sense. More generally, trust is also a fundamental part of the leverage that we still have with the public. Many are willing to pay for accurate information about what to do and what not to do. Anybody who has dealt with the extension services of universities, and with the role universities have in advising the population, can appreciate this. In a world like ours, subject to an overdose of information, I expect trustworthiness to become an increasingly valuable currency, and one that could, if protected, help sustain funding for science.

But in our search for fairness and feelings of accomplishment we have got ourselves into a pit from which it will be hard to get out. The pressure to publish more and in better journals, which used to be exerted among ourselves, has now become a pressure from the outside. And as the basic tenets of competition dictate, the bar on whatever metric you have chosen constantly moves upward. But as we all know, the bar for productivity and quality cannot keep rising indefinitely. The system is rigged and unsustainable, and most of us have the nagging feeling that the quality of publications is not being maintained, or is even going down. At the same time, this has increased the pressure on the youngest members of our community to the point that it is becoming almost common for assistant professors in high-intensity, research-driven universities to divorce due to stress while they are striving to achieve tenure.

Most troubling is the fact that, in the search for the quick publication (and anyone who has tried to tackle real scientific problems knows how ludicrous this notion really is) and for high impact factors, I have started noticing, besides the well-known drastic increase in reported cases of scientific fraud, an increase in what we would probably call (in a very academically appropriate way) “misrepresentation”. I am not alone in this observation.5 Deceptive citations, suggestive writing and other dubious strategies are becoming increasingly common in the literature (at least in my recent experience), used to ignore, deemphasize, slant or obfuscate prior work, and so to mislead the reader, in order to claim more impact for the publication at hand. Of course, these cases are never going to be indicted as fraud because they are not fraud. The data is real, the work is real, and the necessary citations are somewhere in the references. But the devil is in the details, isn’t it?

I have been around long enough to know that this type of behavior is as old as the world. I know many of my colleagues would argue one of four things: i) that this has always happened and has never compromised science, ii) that science will eventually self-correct and that the really important claims will be double-checked (everything else will not be checked because it doesn’t matter), iii) that I should not be talking about this and disturbing the peace, and (this for the feistier ones) iv) that I might be bringing this up because I am concerned about my h-index and want to make sure I can always find someone whose impact is lower than mine.

Furthermore, the situation will get worse as teaching methods shift towards ones that are more internet-based. There will be less personal contact and less chance to assess a student through day-to-day interaction. There was also recently a disconcerting story about an epidemic of student cheating in British universities, which made the front page of The Times: “Almost 50,000 students at British universities have been caught cheating in the past three years amid fears of a plagiarism “epidemic” fueled disproportionately by foreign students.” 5 While this number represents only about 2% of the British student population and probably underestimates the actual amount of cheating, it raises the concern that the problems faced at the research level in universities are not going to go away, but will only get worse, unless tough action is taken. Universities, unfortunately, are incentivized, perhaps unintentionally, to turn a blind eye to this type of behavior (bad PR, lost funding, etc.).

My take on this is that these arguments fail to consider the changing landscape of science in society. The increased transparency now under way will make these types of behavior increasingly evident and increasingly known to the public. The chief editor of Angewandte Chemie International Edition has echoed these concerns. His view is that unacceptable practices, admittedly amongst “black sheep”, are leading to a general suspicion of scientists that is spreading through the offices of journals and funding organizations.6 The cost in terms of lost trust will be, I fear, horrific, with very serious repercussions for our ability to provide our best and brightest with the resources we have been given and entrusted with to do research.

Here is what I think we could do, as a community, to help buck this trend. I take inspiration from previous comments by Whitesides and Deutsch1 and Heller7 who have written about some of these issues with greater clarity and diplomacy than I ever could.

- Shift the focus from “how much” and “where” to “what”. At my age, I find it frustrating how difficult it is for our community to focus on the hard questions. And one of those hard questions is “what have you discovered?” Few of us ever like to be asked that. You’ll not find this question in any grant application, and you’ll hardly ever hear it uttered at conferences, unless with ill intent. It should not be so. Shifting the focus towards the problems and how our work is targeting their solution will do, I believe, wonders in reprioritizing how research funding is spent, which projects are funded, and which scientists we should follow. This should prompt all scientists, old and young, to pursue the hard problems. It would prompt them to answer the “what?” question with what they are trying to achieve. It would make them more sensitive to the quality of the problem they are trying to solve rather than to whether the problem can lead to fast publications. Most research, if one scrutinizes it carefully, is not even incremental, but it is still publishable. If we compare a research problem to climbing Everest, transformative research is the kind that finds new routes to the top. Incremental research, almost as noble in its genuine incarnation, uses an understood route and puts one foot after the next for those who will follow. The “still publishable” type of research runs in circles around the base camp, claiming that its scouting will somehow help others make it to the top.

- Debate rather than appease. Conferences used to be places where research findings and data were discussed and fleshed out. Planck proposed the quantum hypothesis at a scientific meeting, regardless of how ridiculous the idea of quantized energy levels would have sounded to many. There is immense value in creating environments such as those. But to do that we have to promote a culture in which asking a challenging question at a conference is the norm rather than the exception, and in which the questioner is not viewed as a disturber of the peace. Questioning an interpretation is not a mark of disrespect. Failing to question a dubious interpretation or claim should be. Questioning a result or interpretation and disrespecting its author are two entirely different things. I can’t stress enough how important this attitude is for our duty to educate the young scientists of tomorrow. On the other hand, I do not believe that blogs, Twitter, and internet forums qualify as suitable venues for such discussions. Even with moderation, these formats are especially subject to disruption, manipulation, and the relentless force of consensus.

- While society’s shift towards transparency can be used by researchers in a positive way to promote the benefits of the research enterprise, I think that too much talk via internet avenues can be counter-productive. Actions speak louder than words. It is better to go out and “do it”, and then talk about the successful solutions that have helped to build a better society to live in. Young scientists who have not yet had the chance to do anything on their own need to talk about what they are trying to achieve. In both cases, a funding provider faces the question of whether the researchers have the ability to solve the problem, and must also take into account, as part of its decision-making process, whether the researchers have subjective qualities such as trustworthiness, integrity and the drive to achieve a solution. Those attributes will always be a vital asset that must be protected and nurtured on both sides.

In closing, as is becoming customary these days, I should now offer some measure of apology for the opinions expressed. But I won’t do so, as these opinions originate from a concern for the state of science and the future of our best and brightest. I will apologize, however, that the manner in which these opinions have been laid out might not be the clearest or the most palatable.

References

1. Whitesides, G.M., Deutsch, J., Nature, 2011, 469, 21-22.

2. h-index, https://en.wikipedia.org/wiki/H-index.

3. Goodhart, C., https://en.wikipedia.org/wiki/Goodhart's_law.

4. Campbell, D.T., https://en.wikipedia.org/wiki/Campbell's_law.

5. The Times, 2 January 2016, http://www.thetimes.co.uk/tto/education/article4654719.ece.

6. Gölitz, P., Angew. Chem. Int. Ed., 2016, 55, 4-5.

7. Heller, A., Angew. Chem. Int. Ed., 2014, 53, 2-4.