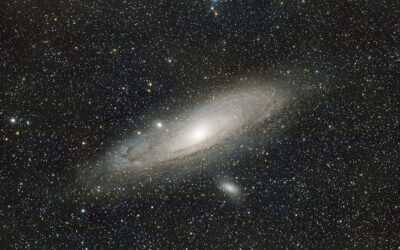

When you lie back and look at the night sky, whether in the heart of a city or the depths of the countryside, the first thing that strikes you is the sheer number of stars. Those visible to the naked eye all belong to our own galaxy, the Milky Way.

Our galaxy is believed to be nearly as old as the Universe but, unlike much of the nearby Universe, it is also teeming with newly formed stars. It is home to regions like the Carina Nebula, which hosts stars hundreds of times the mass of the Sun, and the Orion Nebula, which has created tens of thousands of stars in the past ten million years.

In the nearby Universe, galaxies with little star formation are believed to outnumber actively star-forming galaxies by around six to one, so this high level of activity in the Milky Way is rare. It is part of a wider picture in which astronomers are discovering that the rate at which stars form is dropping, and they want to know why.

How are stars formed?

Most of our understanding of a star’s lifecycle comes from observing stars that are born and die within the Milky Way.

Stars are born in cold clouds of gas and dust, called nebulae, found throughout most galaxies. The low temperatures of these clouds (about 10 K, or -263°C) are necessary for hydrogen gas to clump together into high enough densities to begin the process of star formation. The clump’s gravity attracts more gas, and as that material falls inward it speeds up, gaining energy of motion. When the gas hits the clump, much of that energy is converted into heat. This process continues until the temperature at the center reaches millions of kelvin, hot enough for nuclear fusion to ignite and a star to be born.

Throughout the “main sequence”, the longest stable period of a star’s life, the star produces radiation and heat by fusing hydrogen into helium: in a chain of reactions, four hydrogen nuclei end up as one helium nucleus. The energy released maintains an outward pressure that counteracts the star’s own gravity, and main-sequence stars produce most of a galaxy’s light.
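To see where that energy comes from, the rough sketch below compares the mass of four hydrogen atoms with the mass of one helium-4 atom; the "missing" mass is released as energy through E = mc². It is a simplified budget under stated assumptions: it uses standard atomic masses (which folds in the energy from positrons produced along the way annihilating with electrons), and part of the total is actually carried out of the star by neutrinos.

```python
# Rough energy budget for hydrogen fusion: 4 hydrogen -> 1 helium-4.
# Masses are standard atomic masses in unified atomic mass units (u).
M_HYDROGEN_1 = 1.00782503   # mass of one hydrogen-1 atom, u
M_HELIUM_4 = 4.00260325     # mass of one helium-4 atom, u
MEV_PER_U = 931.494         # energy equivalent of 1 u (E = mc^2), in MeV


def fusion_energy_budget():
    """Return (energy released in MeV, fraction of the input mass converted)."""
    mass_in = 4 * M_HYDROGEN_1
    mass_defect = mass_in - M_HELIUM_4   # mass that "disappears" in the reaction
    energy_mev = mass_defect * MEV_PER_U
    return energy_mev, mass_defect / mass_in


if __name__ == "__main__":
    energy, fraction = fusion_energy_budget()
    print(f"Energy per helium nucleus: {energy:.2f} MeV")
    print(f"Fraction of mass converted: {fraction:.2%}")
```

Running it prints about 26.73 MeV per helium nucleus, with roughly 0.7% of the original mass turned into energy. That small fraction, multiplied over the enormous mass of a star, is what keeps it shining for so long.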

More massive stars must burn their fuel faster to resist their stronger gravity, so they use it up sooner, but they also shine the brightest, emitting high-energy UV light. Lower-mass stars have much longer lifetimes, are much dimmer, and emit low-energy infrared light. Most galaxies host a range of stellar masses from around 0.1 to 10 times the mass of our Sun.
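A common textbook rule of thumb puts numbers on this trade-off. If a star's brightness scales roughly as its mass to the power 3.5, while its fuel supply scales with its mass, then its main-sequence lifetime scales as M / M^3.5 = M^-2.5. The sketch below assumes that approximate exponent and a solar lifetime of about 10 billion years; real stellar models depart from this simple power law, especially at the extremes of the mass range.

```python
# Rule-of-thumb main-sequence lifetime, assuming luminosity ~ M^3.5,
# so lifetime ~ fuel / luminosity ~ M / M^3.5 = M^-2.5.
# Both the exponent and the ~10 billion-year solar lifetime are approximations.
SUN_LIFETIME_GYR = 10.0


def main_sequence_lifetime_gyr(mass_in_suns: float, exponent: float = 2.5) -> float:
    """Approximate main-sequence lifetime in billions of years."""
    if mass_in_suns <= 0:
        raise ValueError("mass must be positive")
    return SUN_LIFETIME_GYR * mass_in_suns ** (-exponent)


if __name__ == "__main__":
    for mass in (0.1, 1.0, 10.0):
        years = main_sequence_lifetime_gyr(mass)
        print(f"{mass:>5} solar masses: ~{years:,.2f} billion years")
```

Across the 0.1 to 10 solar-mass range this gives lifetimes from about 30 million years for the heaviest stars to a few trillion years for the lightest, far longer than the current age of the Universe.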

Once the hydrogen in its core is used up, a star enters the final stages of its life and eventually becomes a degenerate star. A star with a mass similar to or lower than the Sun’s can no longer produce enough radiation to hold itself up, so gravity dominates and its core collapses into a white dwarf.

A similar collapse occurs in stars more massive than the Sun, but there is significantly more material, burning at higher temperatures. So this collapse creates a core capable of fusing heavier elements, starting with fusing helium into carbon. If the star is massive enough, this process continues, building up extra layers that fuse elements as heavy as iron. Once the fuel in all the layers is used up, the star collapses so quickly that its outer layers rebound off the core and are blasted outward at thousands of kilometers per second. This explosion is known as a supernova. The ejected material is thrown into the surrounding gas and dust, helping to seed the next generation of stars, while the collapsed core is left behind as a neutron star or black hole.

How has star formation been changing?

The rate at which stars form has not stayed constant. The Universe is now producing about nine times fewer stars than at its peak, around 10 billion years ago. Our understanding of how star-forming activity has changed through cosmic time was crystallized in a landmark paper published almost a decade ago.

Piero Madau and Mark Dickinson compiled data from dozens of studies, covering hundreds of thousands of galaxies measured at both infrared and UV wavelengths. Their compilation spans galaxies that neighbor our own all the way out to those observed in the early Universe, around 13 billion years ago.

The authors split this galaxy sample into groups based on their distance. Because looking farther away means looking further back in time, this let them track how the distribution of galaxy brightness has changed throughout the lifetime of the Universe. And, since UV light comes mainly from young, massive stars, and infrared light largely from dust heated by those stars, they could convert the brightness directly into star formation rates using well-known equations.
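One widely used set of such conversions comes from Robert Kennicutt's 1998 review, which assumes a Salpeter distribution of stellar masses and takes the simple form SFR = K × L. The sketch below applies the coefficients commonly quoted from that review; compilations such as Madau and Dickinson's may adopt slightly different values, and the example luminosities are hypothetical, so treat the output as illustrative only.

```python
# Star formation rate (SFR) from luminosity via linear calibrations SFR = K * L.
# Coefficients as commonly quoted from Kennicutt (1998), Salpeter IMF.
K_UV = 1.4e-28   # (solar masses / yr) per (erg / s / Hz), rest-frame 1500-2800 Angstrom
K_IR = 4.5e-44   # (solar masses / yr) per (erg / s), total infrared, 8-1000 micron


def sfr_from_uv(l_nu_erg_s_hz: float) -> float:
    """SFR in solar masses per year from UV luminosity per unit frequency."""
    return K_UV * l_nu_erg_s_hz


def sfr_from_ir(l_ir_erg_s: float) -> float:
    """SFR in solar masses per year from total infrared luminosity."""
    return K_IR * l_ir_erg_s


if __name__ == "__main__":
    # Hypothetical example luminosities, chosen only for illustration.
    print(f"UV estimate: {sfr_from_uv(1e28):.2f} solar masses per year")
    print(f"IR estimate: {sfr_from_ir(1e44):.2f} solar masses per year")
```

Because dust absorbs UV light and re-emits it in the infrared, the two estimates complement each other: the UV captures starlight that escapes, while the infrared captures the part hidden by dust.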

These results cemented the idea that star-forming activity was very weak in the early Universe. Then, as gas became more concentrated in galaxies, star formation rose rapidly until it peaked around 10 to 11 billion years ago.

Since then, star formation has declined rapidly. In the local Universe, it has dropped to about one-ninth of its peak value. As a result, around half of the stars observed today are thought to have formed in the first five billion years after the Big Bang, but only a quarter in the most recent six billion years.
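Madau and Dickinson summarized their compilation with a simple fitting formula for the cosmic star formation rate density ψ as a function of redshift z, a measure of how far back in time we are looking: ψ(z) = 0.015 (1+z)^2.7 / (1 + [(1+z)/2.9]^5.6), in solar masses per year per cubic megaparsec. The sketch below evaluates that fit on a grid to locate the peak and compare it with today's value (z = 0); the grid search is just a convenience for this illustration, not part of the original analysis.

```python
# Cosmic star formation rate density from the Madau & Dickinson (2014) fit,
# in solar masses per year per cubic megaparsec (Salpeter IMF).
def sfr_density(z: float) -> float:
    return 0.015 * (1 + z) ** 2.7 / (1 + ((1 + z) / 2.9) ** 5.6)


def find_peak(z_max: float = 8.0, steps: int = 8000) -> tuple[float, float]:
    """Return (redshift, density) of the maximum found on a uniform grid."""
    best_z, best_psi = 0.0, sfr_density(0.0)
    for i in range(1, steps + 1):
        z = z_max * i / steps
        psi = sfr_density(z)
        if psi > best_psi:
            best_z, best_psi = z, psi
    return best_z, best_psi


if __name__ == "__main__":
    peak_z, peak_psi = find_peak()
    ratio = peak_psi / sfr_density(0.0)
    print(f"Peak at z = {peak_z:.2f}, about {ratio:.1f} times today's rate")
```

The peak lands at a redshift of about 1.9, which in standard cosmology corresponds to roughly 10 billion years ago, and today's rate comes out close to one-ninth of the peak.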

What has caused this?

Broadly, astronomers believe that the changing rate of star formation follows the availability of cold gas in the Universe. As galaxies form, this gas becomes concentrated inside them and allows star formation activity to peak. But the gas is then quickly used up. As stars die, the cold gas needed for future star formation is dispersed by supernovae and its chemistry changes, so we start to see a decline.

But understanding exactly how this gas becomes unusable is difficult as galaxies are complex networks subject to numerous internal and external forces. For example, when a supernova ejects material, the shockwaves could create enough turbulence for the gas to clump and trigger the next generation of star formation, but if it is too energetic, it could blow that same gas out of the galaxy.

This is just one aspect of a much larger problem: What happens if more than one supernova goes off at the same time or at different times? What about the other objects, like black holes, that can cause shockwaves? How do these processes affect the gas temperature? The list is endless.

One way to address these questions is to use simulations. For example, the EAGLE simulation recreates the physics of galaxy formation and evolution within a volume of space that contains 10,000 Milky-Way-sized galaxies. It begins in the early Universe, when everything is still very uniform. Stars, galaxies and other structures then form, driven by key cosmological parameters: the densities of dark matter and normal matter, and the rate at which the Universe expands.

Simulations are effective tools to test different models of star formation and how they interact with other galaxy processes. Astronomers can use them to play with the settings of a hypothetical universe to test properties such as the strength of a supernova and see whether it explains our current observations.

However, computational limitations mean that it can be difficult to precisely model these interactions across entire galaxies. Some assumptions have to be made, which are not always accurate.

Our assumptions have to be continually updated through new data from telescopes, such as the recently launched James Webb Space Telescope, which has a special focus on understanding the physics of star formation and will provide new insights to help improve our simulations.

Astronomers think it is unlikely that our understanding of the overall decline will change, but by effectively utilizing these simulations and observations, we can uncover more detail about exactly why star formation is declining.

What will happen in the future?

Some scientists are trying to comprehend what the Universe might look like should this declining trend in star formation continue. They believe that as the “Stelliferous Era” — this current period of star-forming activity — ends, fuel for star formation will effectively disappear.

As the remaining stars die, the Universe would then be dominated by black holes and degenerate stars. It would become an even colder and emptier place: the night sky would dim significantly, solar systems would no longer be able to host life as their central stars grow much colder, and black holes would start to devour whatever material remains.

This future “Degenerate Era” is a bleak and fascinating topic to contemplate. Thankfully, it isn’t going to happen any time soon. The Universe is currently around 13.8 billion years old, but the Degenerate Era isn’t thought to begin until somewhere between 1 quadrillion (10¹⁵, or 1 followed by 15 zeros) and 1 duodecillion (10³⁹, or 1 followed by 39 zeros) years after the Big Bang. However, this is a speculative outcome of the ongoing decline in star-forming activity, and there are many other bizarre possibilities for the ultimate fate of the Universe.

Another possible endpoint revolves around our lack of understanding about dark energy, the force which is causing the Universe to expand at an ever-increasing rate. If this trend continues and the Universe expands faster and faster, then dark energy could get so strong that it rips apart galaxies, stars and even matter until nothing is left, a scenario known as the ‘Big Rip’.

But if the expansion slows or stops, then gravity could start to dominate. Everything would be pulled back together until the whole Universe eventually collapsed in a ‘Big Crunch’. One variation of this scenario even suggests that the collapse could trigger another Big Bang and start a whole new Universe!

Putting these speculative scenarios aside, there is still much to learn about exactly why star formation is declining and what that means for the Universe as a whole. Whilst the problem is difficult to solve, we should perhaps be thankful that star formation has slowed as the Universe has aged.

Although life on Earth depends on the Sun, star formation is known to have adverse effects on life, from reducing the chance of planets forming, to flooding solar systems with harmful radiation, to stars simply exploding. So, if there were too many stars, we might not even be here to contemplate these questions.

Feature image credit: The Carina Nebula taken with the James Webb Space Telescope